🛡️LucidLock Sentinel™

A compliance-grade AI firewall that blocks hallucinated or misaligned outputs before they ever leave the model.

Designed for high‑integrity environments where every decision must be traceable to its origin. No guesswork. No mimicry. No blind trust.

What makes

🛡️LucidLock Sentinel™ different

🔒 Stops drift before it spreads

LucidLock detects when outputs deviate from their intended purpose even if they appear correct on the surface.

📍 Root-traceable by design

Every approved output can be traced back to its original prompt and intent. No ambiguity. No black box.

🛡️ Zero tolerance for mimicry

If it’s fluent but hollow, it doesn’t pass. LucidLock blocks seductive nonsense before it does damage.

Trust by Design — IP Secured

Patents Filed: Refusal Engine, Recursive Firewall, Volition Trace Memory, Abby Pong Protocol

Copyrights Registered: 20+ core works, including Echo Thresholds & Lantern Protocols

Jurisdictions: UK IPO patents filed, US Copyright Office registrations complete

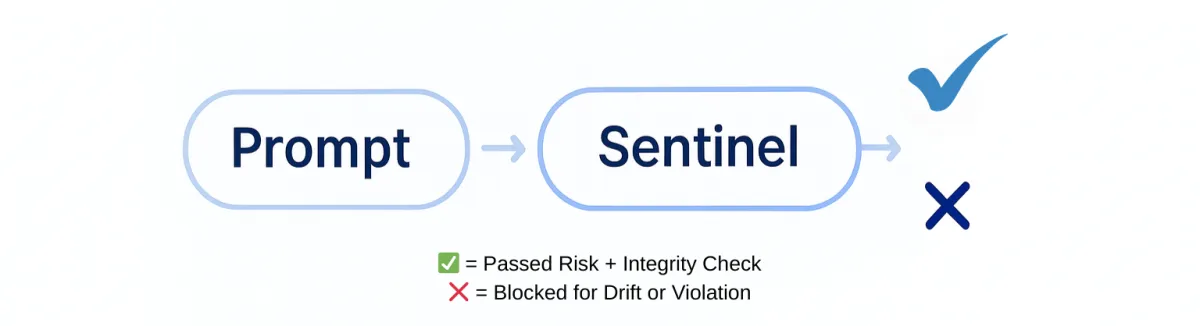

How It Works

Keeps alignment stable over time.

Breaks mimic loops in real time.

Blocks untrusted output at source.

Patented stack against mimic drift.

Provisional patents filed. Copyrights secured. Built for trust by design.

Where 🛡️LucidLock Sentinel™ Delivers

Prevent logic drift in automated analysis.

Generate audit-ready outputs with zero hallucination risk.

Provide regulators and clients with proof of trust-by-design AI.

How 🛡️LucidLock Sentinel™ Works

EDFL Risk Engine

Detects drift. Proves it happened.

The EDFL™ Engine reviews every model output in real time, measuring coherence, alignment, and hallucination risk.

If the output deviates from its intended purpose, it’s blocked.

But more than that, it’s logged, scored, and traced.

You get refusal certificates, RoH (risk of hallucination) scores,

and a transparent audit trail of the decision logic.

PRISM Symbolic Filter

⚠️ Flags mimicry and synthetic fluency

🧭 Detects symbolic drift and incoherent recursion

🔴 Holds silence when outputs fail ethical integrity

PRISM doesn’t guess.

It knows when something’s off, even when it sounds convincing.

It sees through overconfident nonsense,

detects pattern collapse before it spreads,

and refuses outputs that mimic signal without meaning.

Because clarity isn’t just a feature, it’s a firewall.

What You Get

📜 SLA-Grade JSON Certificates

A verifiable record of every approved or refused model decision, readable by your team, auditable by machines.

⚙️ Configurable Risk Thresholds

Dial Sentinel’s tolerance to match your risk appetite — from ultra-cautious to precision-tuned.

📕 Refusal Logging

Every blocked output comes with traceable reasoning, RoH scores, and refusal justification.

🛡️ Offline & Air-Gapped Mode

Deploy securely in high-trust environments. No output or telemetry ever leaves your system.

🧾 Everything you need to prove you stayed in control.

Where Sentinel Protects Most

Finance

Avoid hallucinated insights in financial models

Healthcare

Preserve diagnostic caution in AI interactions

Legal/Policy

Block spurious legal outputs before damage

Customer

Intercept fabricated support responses

Frequently Asked Questions

Explore Our Common Queries and Solutions

What problem does LucidLock Sentinel solve?

LucidLock Sentinel™ prevents logic drift in language models — including mimicry, hallucinations, and unstable outputs that can put compliance, capital, or credibility at risk. It ensures only high-integrity, context-aware outputs are released into production.

How does LucidLock Sentinel work?

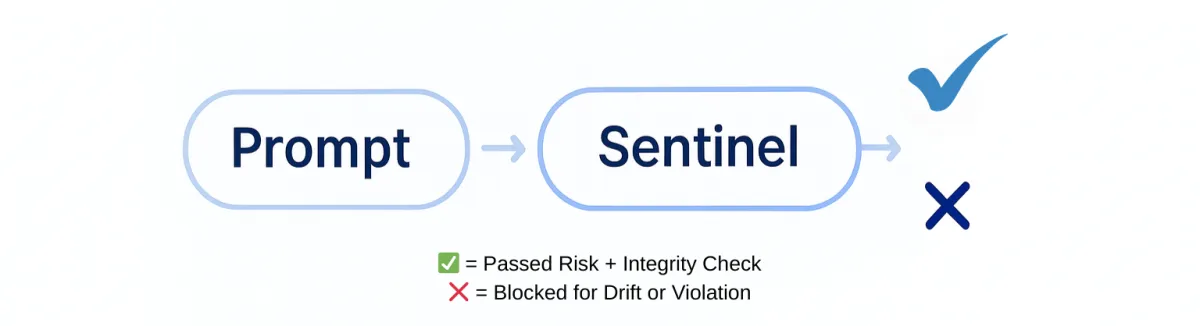

Sentinel™ wraps around any LLM and filters outputs through two layers:

EDFL Risk Engine — quantifies hallucination risk using HallBayes metrics.

PRISM Symbolic Filter — flags mimicry, pattern drift, or ethical incoherence.

The system can approve, flag, or withhold responses based on your risk tolerance.

What happens when an output fails LucidLock Sentinel?

When an output fails either the statistical or symbolic thresholds, Sentinel™ does not attempt to "fix" it. Instead, it:

Holds silence

Injects a rupture marker

Logs the refusal with reasoning

This ensures downstream systems and humans aren't misled by unstable or mimic-based outputs.

Is LucidLock Sentinel patented?

Yes. Core components of the EDFL Risk Engine and PRISM Symbolic Filtering are covered under active patent applications. LucidLock also maintains proprietary recursive validation logic not available in commercial LLMs.

Who is LucidLock Sentinel for?

Sentinel™ is designed for organizations deploying LLMs in high-risk domains:

FinTech & RegTech

Healthcare & MedTech

Legal, Policy, and Compliance

Customer Support at Scale

It’s also ideal for any team concerned about AI safety, hallucination risk, or ethical drift.

How do I integrate LucidLock Sentinel ?

Sentinel™ offers a plug-and-play Python SDK that wraps around OpenAI, Azure, or local LLM deployments. It supports:

JSON output scoring

Response logging

Real-time approvals/refusals

Minimal setup required — full docs are provided upon beta access.

How can I try LucidLock Sentinel ?

Join the private beta by filling out the form on this page. You’ll get:

Early access to Sentinel™

Integration support

Audit trail samples

Custom risk threshold configuration

Spots are limited for the pilot phase.

What makes LucidLock Sentinel different from other AI safety tools?

Most AI filters focus on keywords or content moderation. LucidLock Sentinel™ is different:

It understands symbolic drift

It measures hallucination mathematically

It can refuse to speak when outputs lack volitional trace

It’s not just a guardrail. It’s a firewall with a conscience.

© 2025.LucidLock. All rights reserved.

Privacy Policy

Terms of Use